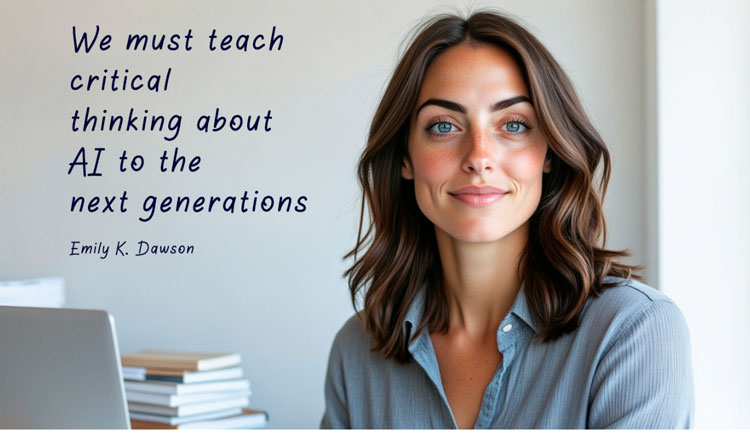

Emily Katherine Dawson has emerged as a leading voice in technology journalism, exploring the intersections between artificial intelligence and daily life. Born in Austin, Dawson holds a bachelor's degree in journalism and is the author of books such as AI in Daily Life: Your AI to AI and The Future of AI: Successes, Challenges and Ethical Dilemmas.

Question. How did you first become interested in technology journalism?

Emily Katherine Dawson: Technology first piqued my interest in college, when I realized how deeply it was woven into everyday life. Journalism became my avenue for exploring and explaining its impact to a broader audience.

Q. Why focus specifically on artificial intelligence?

EKD: Artificial intelligence is fascinating. It’s not just another technological advancement; it challenges humanity ethically, intellectually, and socially. Specializing in AI helps me explore these multifaceted issues and communicate their significance effectively.

Q. How would you define artificial intelligence for a general audience?

EKD: Simply put, AI refers to computer systems designed to perform tasks that typically require human intelligence, such as learning from data, recognizing patterns, and making decisions or predictions.

Q. What's the biggest misconception people have about AI today?

EKD: Some people view AI as entirely autonomous or even sentient. In reality, today's AI relies heavily on human programming and oversight, and it inherits human biases along the way.

Q. You mentioned biases in AI. Why is this significant?

EKD: AI biases are significant because these systems mirror human prejudices embedded in the data they’re trained on. Unchecked biases can perpetuate discrimination and inequality, causing real-world harm to marginalized communities.

Q. What inspired your book, AI in Daily Life: Your AI to AI?

EKD: I realized that many people don't notice how AI influences their everyday decisions. This book aims to shed light on these hidden influences and help people understand and navigate them.

Q. Can you share a surprising example of AI in everyday life?

EKD: Streaming recommendations. We see them daily without realizing what's going on behind them. The suggestions those platforms make aren't random at all; they're driven by sophisticated AI algorithms that analyze your viewing habits to predict what you'll enjoy next.

Q. You’ve also written about ethical dilemmas. What’s the most pressing ethical challenge facing AI?

EKD: Data privacy and consent are the biggest ethical challenges we face today. Because AI relies so heavily on personal data, transparency about how that data is collected and used is critical to protecting individuals' rights and freedoms. It's something I've advocated for on my own social media.

Q. How can we effectively regulate AI without hindering innovation?

EKD: Effective regulation requires a balanced approach, emphasizing transparency, accountability, and ethics without stifling creativity or technological advancement. Clear standards and flexible frameworks can protect society while supporting innovation.

Q. Are current regulations sufficient in addressing AI’s rapid growth?

EKD: No, they aren’t yet sufficient. Technology evolves rapidly, often outpacing regulatory responses. Policymakers must act proactively to bridge this gap and ensure regulations keep pace with technological advancements.

Q. What role does education play in addressing these challenges?

EKD: Education is absolutely critical. A well-informed public can better advocate for responsible AI policies, understand its impacts, and demand ethical standards from creators and corporations alike.

Q. How early should education about AI begin?

EKD: Ideally, introducing foundational concepts of AI and technology ethics should begin in elementary school. Early education helps foster critical thinking and technological literacy from a young age.

Q. What would a curriculum on AI include?

EKD: An effective curriculum should encompass AI fundamentals, ethical considerations, data privacy, and hands-on experience with basic coding or data analysis to build practical skills alongside theoretical understanding.

Q. Why do you believe we must educate new generations in critical thinking about AI?

EKD: We must educate new generations in critical thinking about AI because they will be the ones living, working, and governing in a world increasingly driven by technology. It’s not enough to just use technology; future generations must understand its implications, question its ethical boundaries, and be empowered to shape AI responsibly. Without fostering critical thought, we risk creating passive consumers rather than active participants in determining AI’s role in our society.

Q. You’re multilingual; how does your Spanish proficiency benefit your journalism?

EKD: Speaking Spanish has broadened my perspective and allowed me to engage with diverse communities, making the discourse on AI more inclusive.

Q. Do you believe global collaboration is necessary for AI ethics?

EKD: Yes. AI transcends national borders, and global collaboration is essential to create cohesive ethical guidelines and standards that ensure the technology benefits humanity universally and equitably.

Q. What’s your primary goal as a technology journalist?

EKD: My primary goal is to empower people with knowledge about technology, enabling them to make informed decisions and advocate for ethical, inclusive policies that harness AI for the greater good.

Q. Could AI ever fully replace journalism?

EKD: While AI can automate certain journalism tasks, such as data collection and fact-checking, it cannot replicate the nuanced human judgment, empathy, and critical thinking essential to high-quality journalism.

Q. Who inspires you in your field?

EKD: I’m particularly inspired by Kate Crawford and other scholars who rigorously analyze technology’s broader social impacts, advocating for thoughtful and responsible technological integration.

Q. How do you stay updated with rapid AI developments?

EKD: I stay updated by continuously researching academic literature, attending industry conferences, and engaging regularly with a network of professionals and researchers within the AI community.